Fluently Project: Real-Time Biosignal Monitoring for Human-Robot Collaboration

Abstract

The Fluently Project represents a paradigm shift in human-robot collaboration within industrial environments, aiming to optimize productivity while safeguarding worker well-being. By integrating advanced neurotechnology with AI-driven decision-making, Fluently enables industrial robots to adapt in real time to an operator's cognitive and physical state.

Through a novel human-machine interface, operators can command robots using natural speech and gestures, while their mental and physiological conditions, such as cognitive load, are assessed with wearable devices: the Bitbrain Versatile Bio Amplifier for biosignal monitoring and the Bitbrain Diadem 12ch EEG headset for EEG recording.

The contributions of the University of Applied Sciences and Arts of Southern Switzerland (SUPSI) include the development of a privacy-preserving, multimodal pipeline for real-time mental-state inference and adaptive robot behavior, built on collaborative learning frameworks.

This blog post explores the project’s objectives, technological infrastructure, and real-world applications, emphasizing how Fluently sets new standards for ethical, efficient, and human-centric automation.

Introduction

MultiPhysio‑HRC Article Cover

The Fluently Project aims to take human-robot interaction in industrial settings a step further. The goal is to leverage the latest advances in AI-driven decision-making to achieve true social collaboration between humans and machines in extremely dynamic manufacturing contexts. One key aspect of any social partnership is understanding one's partner.

The state of one's collaborator strongly affects which actions can be performed. Speech content, speech tone, gestures, facial expressions, and physical or cognitive load are all factors that can be used to determine the operator's general state and then modulate the workload or pace accordingly.

EEG and other biosignals, such as ECG or pulse-oximeter-based metrics, are key to determining the state of the human. Unlike facial expressions or speech, biosignals are very difficult to alter consciously: people can lie, speak ironically, or force a facial expression to hide their real state. Biosignals are objective measurements that encode variables such as the physical load or the cognitive effort exerted while performing a task.

Fluently VR Scenario with Bitbrain Diadem EEG Headset

Exploring the Fluently Project

Objectives and challenges

In modern manufacturing, robots bring greater precision, strength, and endurance to tasks than humans do, while humans bring more expertise, judgment, and foresight. The goal of human-robot interaction in a smart industry is to integrate and simultaneously elevate the complementary abilities of humans and robots as they work together toward a common goal. The Fluently EU project aims to improve the current state of human-robot interaction in industrial sectors such as manufacturing. To achieve this, the Fluently team is developing a human-machine interface that allows robots to better interpret their human colleagues’ needs. The Fluently framework leverages the latest advances in AI-driven decision-making to achieve true social collaboration between humans and machines, even in extremely dynamic manufacturing contexts.

In this context, the two authors of this post co-authored, with other colleagues, the article “MultiPhysio-HRC: A Multimodal Physiological Signals Dataset for Industrial Human–Robot Collaboration” [5]. The work provides a multimodal dataset designed to investigate human psychophysiological states, such as stress and cognitive load, in realistic human–robot collaboration (HRC) scenarios within the framework of Industry 5.0.

The Fluently framework features:

- Interpretation of speech content, speech tone and gestures, automatically translated into robot instructions, making industrial robots accessible to any skill profile

- Assessment of the operator’s state through a dedicated sensors infrastructure, to enrich an AI-based behavioral framework that allows robots to adapt their assistance strategies based on the operator's condition

- Modelling products and production changes so that robots, in cooperation with humans, can recognize, interpret, and match them. By doing so, Fluently ensures that the collaboration enhances the operator’s capabilities without overwhelming them.

Fluently introduces two key elements:

- The Smart Interface: This interface allows operators to effortlessly command robots using speech and gestures, adapting robot behavior in real time to the operator's condition and optimizing performance.

- The Robo-Gym: A training and testing facility where humans and robots learn to collaborate. This environment helps robots and operators to adapt seamlessly to each other, improving cooperation and efficiency.

The challenges the Fluently project is tackling are:

- Introducing robotics into value chains that rely heavily on human-based activities, by training people with no automation background to cooperate smoothly with a robot

- Pushing towards zero scrap and zero production downtime by reducing the physical and cognitive load of the human operator, thanks to robot behaviors tailored to the specific operator they work with

- Reducing ramp-up time in matching production changes, such as part types, variants, volumes, and mix ratio, using Artificial Intelligence (AI) to enable recognition, classification, and inference on new process recipes and strategies.

Potential impact of a project involving robotics and human interaction (implications for health, rehabilitation, and industry)

Human workers in the manufacturing industry are often placed in high-stress situations while performing highly complex work with limited resources. In these scenarios, the value of automation lies not only in optimizing quality and resources but also in preserving workers’ well-being. Cognitive and physical load fluctuate constantly in response to production requirements. Robots equipped with Fluently will continuously evaluate humans’ physical and cognitive load, learn, and build experience with their human teammates, establishing a manufacturing practice that relies on both quality and operator well-being. This synergy is designed to alleviate the cognitive and physical burden on operators, enhancing both efficiency and safety [1]. No industrial robot is currently capable of interpreting such a context and deliberating autonomously to target quality and productivity while caring for human workers. Fluently sets new standards in manufacturing, where technology enhances human capabilities and well-being, opening a new era of cooperative industrial innovation.

In this video of the Fluently training experience, a participant equipped with the Bitbrain Diadem EEG headset and the Bitbrain Versatile Bio Amplifier plays a VR game (Richie's Plank Experience) in which he must walk across a plank suspended above a building. This task is designed to elicit high levels of stress and arousal.

SUPSI's role in the Fluently Project

Contributions and milestones achieved

To tackle the challenges of the Fluently project, the SUPSI team designed and conducted an ad-hoc experimental campaign. The campaign was built around the project’s industrial use cases and involved multiple participants performing tasks in direct collaboration with an industrial robot. During the collaboration, psychological questionnaires were administered and multiple physiological signals were collected, in order to map the operators' perceived states to their physiological responses. These data were used to develop the machine learning models to be deployed on the H-Fluently device. SUPSI leveraged the wide variety of collected modalities and sensors to develop a multimodal approach [2] that reliably predicts the operator's mental state.
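
One common way to combine several sensors is late fusion: a separate classifier per modality outputs a stress probability, and those probabilities are merged into one estimate. The sketch below assumes that scheme (a weighted average that renormalizes when a sensor drops out) plus a hypothetical threshold that turns a questionnaire score into a binary training label. It is not necessarily the fusion method used in [2]; the modality names, weights, and threshold are illustrative assumptions.

```python
from typing import Dict


def questionnaire_label(score: float, threshold: float) -> int:
    """Map a self-reported stress score to a binary 'high stress' label.

    The threshold is a hypothetical choice; in practice it depends on the
    questionnaire used.
    """
    return int(score >= threshold)


def late_fusion(probs: Dict[str, float], weights: Dict[str, float]) -> float:
    """Weighted average of per-modality stress probabilities.

    Modalities absent from `probs` (e.g. a disconnected sensor) are skipped and
    the weights of the remaining ones are renormalized so they sum to 1.
    """
    available = [m for m in probs if weights.get(m, 0.0) > 0.0]
    if not available:
        raise ValueError("no modality with a positive weight is available")
    total = sum(weights[m] for m in available)
    return sum(weights[m] * probs[m] for m in available) / total


# Illustrative weights (assumed): EEG trusted slightly more than ECG and EDA.
WEIGHTS = {"eeg": 0.5, "ecg": 0.25, "eda": 0.25}

print(late_fusion({"eeg": 0.8, "ecg": 0.6, "eda": 0.7}, WEIGHTS))  # all sensors
print(late_fusion({"eeg": 0.8, "ecg": 0.6}, WEIGHTS))              # EDA missing
```

Late fusion keeps each modality's model independent, so a missing or noisy sensor degrades the estimate gracefully instead of breaking it; feature-level (early) fusion is the main alternative.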

The Fluently App Interface

With support from Bitbrain, SUPSI developed a pipeline to acquire and process physiological data from the Versatile Bio and the Diadem EEG directly on the H-Fluently device. The pipeline involves multiple steps, including online signal processing and filtering, feature extraction, and model inference. The model runs inference directly on the mobile device and provides feedback to the operator through the Fluently app interface.
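
The steps listed above (online filtering, feature extraction, inference) can be sketched as a per-sample streaming loop. This is a minimal, dependency-free illustration under stated assumptions: a single channel, a first-order high-pass filter to remove baseline drift, a sliding window with two simple features (RMS and standard deviation), and a logistic model whose weights are placeholders. The actual Fluently filters, features, and model are not specified here, and the Bitbrain SDK calls that deliver samples are deliberately not shown.

```python
import math
from collections import deque
from typing import Deque, List, Optional, Sequence


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


class OnePoleHighPass:
    """Causal first-order high-pass filter: y[n] = a * (y[n-1] + x[n] - x[n-1])."""

    def __init__(self, cutoff_hz: float, fs_hz: float) -> None:
        rc = 1.0 / (2.0 * math.pi * cutoff_hz)
        self.a = rc / (rc + 1.0 / fs_hz)
        self.prev_x: Optional[float] = None
        self.prev_y = 0.0

    def step(self, x: float) -> float:
        if self.prev_x is None:  # first sample: start from zero output, no jump
            self.prev_x = x
            return 0.0
        y = self.a * (self.prev_y + x - self.prev_x)
        self.prev_x, self.prev_y = x, y
        return y


class StreamingStressPipeline:
    """Filter -> sliding window -> features -> logistic inference, one sample at a time."""

    def __init__(self, fs_hz: float, window_s: float, weights: Sequence[float],
                 bias: float, cutoff_hz: float = 0.5) -> None:
        self.filter = OnePoleHighPass(cutoff_hz, fs_hz)
        self.window: Deque[float] = deque(maxlen=int(window_s * fs_hz))
        self.weights = list(weights)  # placeholder model parameters (assumed)
        self.bias = bias

    def features(self) -> List[float]:
        n = len(self.window)
        mean = sum(self.window) / n
        rms = math.sqrt(sum(v * v for v in self.window) / n)
        std = math.sqrt(sum((v - mean) ** 2 for v in self.window) / n)
        return [rms, std]

    def push(self, sample: float) -> Optional[float]:
        """Add one raw sample; return a stress probability once the window is full."""
        self.window.append(self.filter.step(sample))
        if len(self.window) < self.window.maxlen:
            return None
        z = self.bias + sum(w * f for w, f in zip(self.weights, self.features()))
        return sigmoid(z)


pipe = StreamingStressPipeline(fs_hz=250.0, window_s=2.0, weights=[0.8, 0.5], bias=-1.0)
for n in range(1000):
    p = pipe.push(math.sin(2 * math.pi * 10.0 * n / 250.0))  # synthetic 10 Hz signal
    if p is not None and n % 250 == 0:
        print(f"t={n / 250.0:.1f}s stress_prob={p:.2f}")
```

Recomputing the features on every sample costs O(window) per sample; a real-time implementation would more likely run inference only every hop (a fraction of a second) or maintain running sums.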

The participant, wearing the Bitbrain Diadem EEG headset and Bitbrain Versatile Bio Amplifier, directs the robot to perform tasks in the Fluently Project training experience.

This pipeline was integrated into a privacy-preserving framework to safeguard the operator’s sensitive data. The framework relies on Federated Learning (FL), which allows collaborative model training across decentralized devices without sharing raw data [3]. Using FL, we can fine-tune the machine learning model on-device with subject-specific data to improve its predictions for that specific person. At the same time, the global model is continuously improved by aggregating the weights of the individual personalized models, without the server ever receiving sensitive data.
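
The fine-tune-then-aggregate loop described above can be illustrated with Federated Averaging (FedAvg), a standard aggregation scheme discussed in the FL literature [3]. In this minimal sketch, each device fine-tunes a tiny logistic-regression model on its own data with plain SGD, and the server averages the returned weight vectors, weighting each by its local sample count; only weights cross the network. The model, learning rate, epochs, and toy data are assumptions for illustration, and the Fluently framework's actual model and aggregation rule may differ.

```python
import math
from typing import List, Sequence, Tuple

# (feature vector including a constant 1.0 bias term, label 0/1)
Sample = Tuple[List[float], int]


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def local_update(global_w: Sequence[float], data: Sequence[Sample],
                 lr: float = 0.1, epochs: int = 5) -> List[float]:
    """On-device fine-tuning with SGD on the logistic loss.

    `data` never leaves the device; only the returned weights are shared.
    """
    w = list(global_w)
    for _ in range(epochs):
        for x, y in data:
            err = sigmoid(sum(wi * xi for wi, xi in zip(w, x))) - y
            for i, xi in enumerate(x):
                w[i] -= lr * err * xi
    return w


def fed_avg(client_weights: Sequence[Sequence[float]],
            n_samples: Sequence[int]) -> List[float]:
    """Server-side FedAvg: average of client weights, weighted by local dataset size."""
    if not client_weights or len(client_weights) != len(n_samples):
        raise ValueError("need one sample count per client")
    total = sum(n_samples)
    dim = len(client_weights[0])
    return [sum(w[i] * n for w, n in zip(client_weights, n_samples)) / total
            for i in range(dim)]


# One federated round with two operators' devices (toy data: one feature + bias).
global_w = [0.0, 0.0]
device_a = [([1.0, 1.0], 1), ([-1.0, 1.0], 0)]
device_b = [([2.0, 1.0], 1), ([-2.0, 1.0], 0), ([0.5, 1.0], 1)]
personal_a = local_update(global_w, device_a)  # stays on device A for inference
personal_b = local_update(global_w, device_b)  # stays on device B for inference
global_w = fed_avg([personal_a, personal_b], [len(device_a), len(device_b)])
print(global_w)
```

In practice, rounds repeat: the new global weights are sent back to the devices, which fine-tune them again on their own operator's data.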

Building on these mental-state predictions, we developed adaptive behaviors that the robot learns through direct, on-the-field collaboration with its human teammate. In this way, the robot can learn the preferences of individual colleagues and act accordingly to alleviate their psychophysical load.
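
The post does not detail how the robot learns individual preferences. As one possible illustration, and explicitly a swapped-in technique rather than the Fluently method, the sketch below uses a per-operator epsilon-greedy multi-armed bandit: each arm is a robot pace level, and the reward trades pace off against the predicted stress probability produced by the on-device model. The pace levels, the reward formula, and the `stress_weight` parameter are all hypothetical.

```python
import random
from typing import Dict, List, Tuple


class PacePreferenceLearner:
    """Per-operator epsilon-greedy bandit over robot pace levels (hypothetical design)."""

    def __init__(self, paces: List[float], epsilon: float = 0.1,
                 stress_weight: float = 1.0, seed: int = 0) -> None:
        self.paces = paces
        self.epsilon = epsilon
        self.stress_weight = stress_weight
        self.rng = random.Random(seed)
        self.counts: Dict[str, List[int]] = {}
        self.values: Dict[str, List[float]] = {}  # running mean reward per pace

    def _stats(self, operator: str) -> Tuple[List[int], List[float]]:
        if operator not in self.counts:  # separate statistics for each operator
            self.counts[operator] = [0] * len(self.paces)
            self.values[operator] = [0.0] * len(self.paces)
        return self.counts[operator], self.values[operator]

    def best(self, operator: str) -> int:
        _, values = self._stats(operator)
        return max(range(len(values)), key=values.__getitem__)

    def choose(self, operator: str) -> int:
        counts, _ = self._stats(operator)
        for arm, c in enumerate(counts):  # try every pace once first
            if c == 0:
                return arm
        if self.rng.random() < self.epsilon:  # explore
            return self.rng.randrange(len(self.paces))
        return self.best(operator)  # exploit

    def update(self, operator: str, arm: int, stress_prob: float) -> None:
        """Reward = pace minus weighted predicted stress (an assumed trade-off)."""
        counts, values = self._stats(operator)
        reward = self.paces[arm] - self.stress_weight * stress_prob
        counts[arm] += 1
        values[arm] += (reward - values[arm]) / counts[arm]  # incremental mean


# Toy operator whose predicted stress rises sharply at full pace.
simulated_stress = {1.0: 0.9, 0.8: 0.3, 0.5: 0.1}
learner = PacePreferenceLearner(paces=[1.0, 0.8, 0.5])
for _ in range(200):
    arm = learner.choose("operator_1")
    learner.update("operator_1", arm, simulated_stress[learner.paces[arm]])
print(learner.paces[learner.best("operator_1")])  # prints 0.8
```

A deployed system would also constrain which paces may be explored at all, for example to respect safety and cycle-time limits.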

Case Study: Bitbrain Versatile Bio Amplifier and the Bitbrain Diadem 12ch EEG headset

Overview of the devices: key features and specifications

Bitbrain’s Versatile Bio Amplifier and Diadem EEG are portable, research-grade neurotechnology devices designed for high-quality data acquisition in real-world conditions. The Versatile Bio Amplifier records multiple physiological signals (such as ECG, EMG, GSR, respiration, temperature, and motion) with precise synchronization, making it ideal for multimodal studies of human behavior.

The Diadem EEG is a lightweight, dry-electrode EEG headset that captures brain activity quickly and comfortably without gels, enabling fast setup and reliable monitoring of cognitive and emotional states. Together, they provide flexible, multimodal mobile solutions for neuroscience, human-factors research, and applied neurotechnology across lab and out-of-lab environments.

On the left, the Bitbrain Diadem 12ch EEG; on the right, the Bitbrain Versatile Bio Amplifier.

Integration of Bitbrain Versatile Bio and Diadem EEG into the Fluently Project and their contributions to its progress

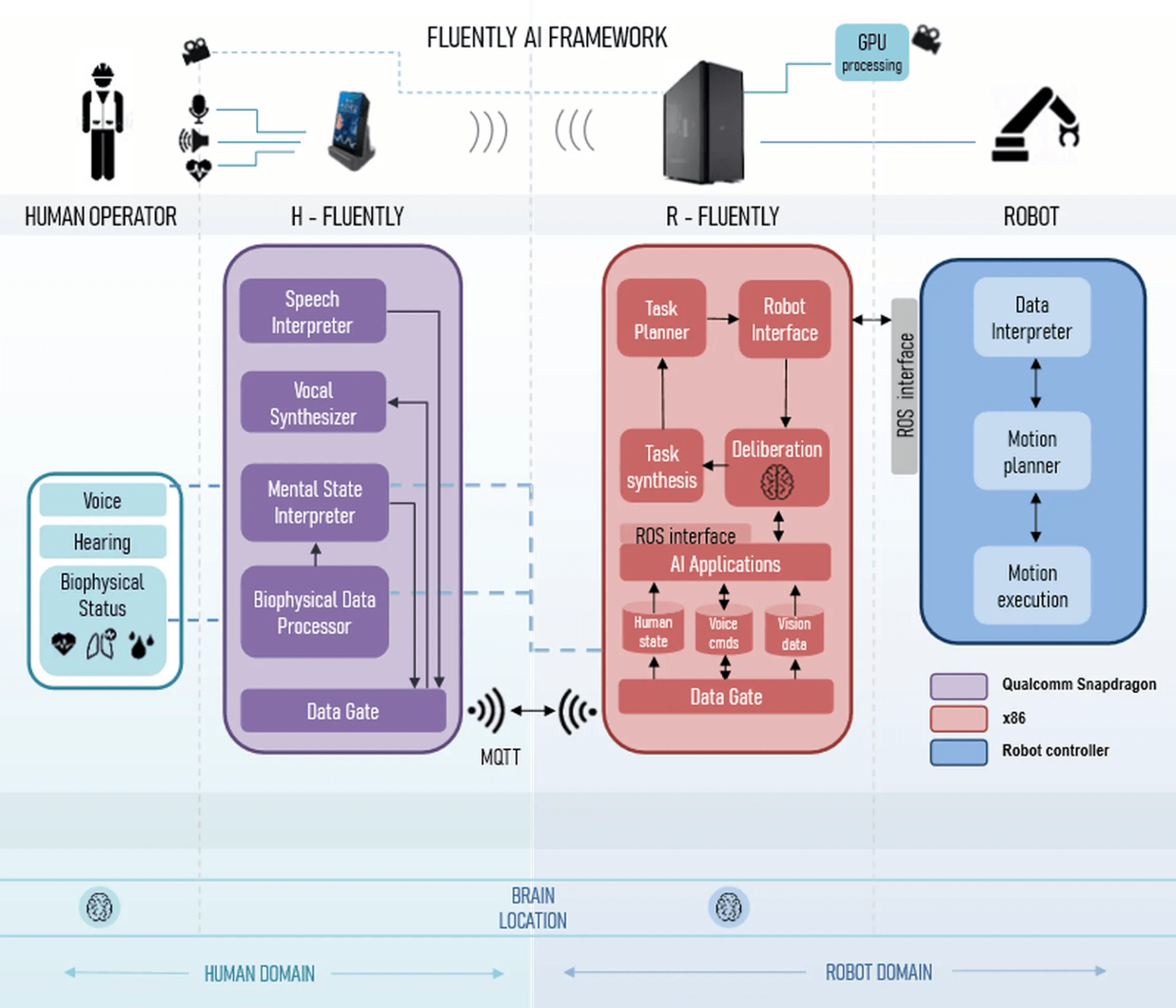

The components of the Fluently AI framework are distributed across multiple devices. The flow of information moves from H-Fluently, where physiological data are collected from the human operator and processed on-device, to R-Fluently, where the main robot intelligence components are deployed, ending with communication with the robot controller to execute motion commands.
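
The H-Fluently → R-Fluently → robot controller flow can be sketched as message passing between three components. The minimal in-process sketch below uses Python queues in place of whatever transport the real system uses, and assumes that only the inferred state (not raw biosignals) is forwarded from H-Fluently. The message types, field names, and the speed-scaling rule on R-Fluently are hypothetical.

```python
from dataclasses import dataclass
from queue import Queue
from typing import List


@dataclass(frozen=True)
class StateEstimate:
    """Message from H-Fluently: processed on-device, no raw signals inside."""
    operator_id: str
    timestamp_s: float
    stress_prob: float


@dataclass(frozen=True)
class MotionCommand:
    """Message from R-Fluently to the robot controller."""
    timestamp_s: float
    speed_scale: float


def r_fluently_step(inbox: "Queue[StateEstimate]", outbox: "Queue[MotionCommand]",
                    min_scale: float = 0.4) -> None:
    """Drain pending state estimates and emit one motion command per estimate.

    Hypothetical rule: slow down linearly with predicted stress, never below min_scale.
    """
    while not inbox.empty():
        est = inbox.get()
        scale = max(min_scale, 1.0 - est.stress_prob)
        outbox.put(MotionCommand(est.timestamp_s, scale))


class RobotControllerStub:
    """Stands in for the real robot controller; only records what it would execute."""

    def __init__(self) -> None:
        self.executed: List[MotionCommand] = []

    def execute(self, cmd: MotionCommand) -> None:
        self.executed.append(cmd)


h_to_r: "Queue[StateEstimate]" = Queue()
r_to_robot: "Queue[MotionCommand]" = Queue()
controller = RobotControllerStub()

h_to_r.put(StateEstimate("operator_1", 0.0, 0.25))  # H-Fluently publishes
h_to_r.put(StateEstimate("operator_1", 1.0, 0.9))
r_fluently_step(h_to_r, r_to_robot)                   # R-Fluently decides
while not r_to_robot.empty():                         # controller executes
    controller.execute(r_to_robot.get())
print([c.speed_scale for c in controller.executed])
```

Keeping R-Fluently's decision logic behind a message interface means the simple rule above could be replaced by a learned policy without touching the device-side code.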

The Versatile Bio and the Diadem EEG devices are integrated into the H-Fluently device using the Bitbrain SDK. The devices allow real-time data acquisition, the first step in assessing the human psychophysical state.

Bitbrain’s Versatile Bio and Diadem EEG devices were easy to use and worked well in an industrial setting, showing that EEG is a viable tool for this kind of scenario. Their lightweight, wireless design lets operators move freely without interfering with their tasks. Integration with the Fluently system through the Bitbrain SDK made it simple to collect and process physiological data in real time on the H-Fluently device. The sensors provided accurate, reliable data, which was key to detecting the operator's mental state. Overall, the devices were comfortable for users and practical for use in a real factory environment.

Fluently AI Framework

Conclusion

The Fluently Project demonstrates how the integration of biosignal monitoring and intelligent robot behavior can revolutionize human-robot interaction in industrial settings. Devices like Bitbrain's Versatile Bio and Diadem EEG have proven essential for accurately assessing operator state and enabling real-time adaptations. SUPSI’s multimodal processing and privacy-preserving machine learning frameworks allow for robust and ethical deployment of these technologies.

As neurotechnology and collaborative robotics continue to evolve, Fluently paves the way for future industrial systems where robots are not mere tools, but empathetic partners, enhancing both human well-being and operational efficiency.

About the authors

Andrea Bussolan is a PhD student at the Automation, Robotics, and Machines (ARM) lab of SUPSI’s Department of Innovative Technologies, where he focuses on human–robot collaboration, cognitive and physiological workload assessment, and real-world multimodal data acquisition. His work combines robotics, ergonomics, and neuroscience, using EEG and biosignals to study how stress and cognitive load affect operators during industrial human–robot collaborative tasks. He also explores privacy-preserving AI techniques such as federated learning to develop personalized models for safer and more adaptive human–robot interaction. ResearchGate profile, Google Scholar, LinkedIn.

Pablo Urcola Irache is a researcher with a PhD in Systems Engineering and Computer Science, specializing in robotics. With more than fifteen years of experience in national and international R&D projects, he has focused on developing technologies with real impact. As a software engineer, he applies his background in systems and robotics to neurorehabilitation and to thought-controlled robotic prototypes that respond to brain activity, bringing neurotechnology closer to end users and facilitating its deployment at home and in real-world environments. ResearchGate profile, Google Scholar, LinkedIn.

References

[1] A. Valente, G. Pavesi, M. Zamboni, and E. Carpanzano, “Deliberative robotics – a novel interactive control framework enhancing human-robot collaboration,” CIRP Annals, vol. 71, no. 1, pp. 21–24, 2022, doi: 10.1016/j.cirp.2022.03.045.

[2] A. Bussolan, S. Baraldo, L. M. Gambardella, and A. Valente, “Multimodal fusion stress detector for enhanced human-robot collaboration in industrial assembly tasks,” in 2024 33rd IEEE International Conference on Robot and Human Interactive Communication (ROMAN), Aug. 2024, pp. 978–984, doi: 10.1109/RO-MAN60168.2024.10731373.

[3] P. Kairouz et al., “Advances and Open Problems in Federated Learning,” arXiv:1912.04977, Mar. 8, 2021. Accessed: Nov. 11, 2022. [Online]. Available: http://arxiv.org/abs/1912.04977

[4] O. Avram, “Human expertise and robotic precision: how the University of Applied Sciences and Arts of Southern Switzerland uses Qualcomm AI Hub.” [Online]. Available: https://www.qualcomm.com/developer/blog/2024/11/university-applied-sciences-arts-southern-switzerland-qualcomm-ai-hub

[5] A. Bussolan, S. Baraldo, O. Avram, P. Urcola, L. Montesano, L. M. Gambardella, and A. Valente, “MultiPhysio-HRC: A Multimodal Physiological Signals Dataset for Industrial Human–Robot Collaboration,” Robotics, vol. 14, no. 12, Art. no. 184, 2025, doi: 10.3390/robotics14120184.